How E.U. Directive 2024/1760 Affects Your Cyber Risk Profile

Why does the E.U.’s latest update to its existing sustainability regulations matter in cyber security and risk management terms?

On 25 July 2024, the Directive on corporate sustainability due diligence (Directive 2024/1760) entered into force. Its objective is to promote sustainable and responsible behaviour by in-scope entities across their operations and throughout their global value chains. An amended version has an effective date of 17 October 2024.

The rules seek to ensure that organisations identify and address the adverse human rights and environmental impacts of their actions, both within and outside the European Union.

Scope

The directive does not apply universally, but rather to large EU limited liability companies & partnerships:

+/- 6,000 companies – >1000 employees and >EUR 450 million turnover (net) worldwide.

However, it also applies to large non-EU companies:

+/- 900 companies – > EUR 450 million turnover (net) in EU.

Micro companies and SMEs are not directly covered by the rules, but SMEs may be indirectly affected as business partners within the value chains of in-scope companies.

Compliance Costs

The costs of establishing and operating the due diligence process, including transition costs, fall to the entity. These come on top of any expenditure and investment required to adapt operations and value chains in order to meet the due diligence obligation.

Double Materiality Concept

One difference from earlier sustainability regulation is the double materiality concept, which requires undertakings to “report both on the impacts of the activities of the undertaking on people and the environment [impact materiality], plus on how sustainability matters affect the undertaking [financial materiality]”.

Financial materiality refers to sustainability-related matters that could present financial risks, or opportunities for an undertaking. ESRS 1, Section 3.5, states that “[a] sustainability matter is material from a financial perspective, if it triggers, or could reasonably be expected to trigger, material financial effects on the undertaking.”

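It is here that cyber resilience and technology risk controls come into play, in the same manner as for financial statement line items (FSLIs): the systems that capture, process and store the reported figures must be controlled if the figures themselves are to be relied upon.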

Where there is any question over the validity and accuracy of the quantitative measures an entity reports under its non-financial reporting requirements, external auditors will not be in a position to sign off the organisation’s accounts with reasonable assurance.
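The double materiality test reduces to a simple rule: under ESRS 1, a sustainability matter is material if it is material from the impact perspective, the financial perspective, or both. The minimal Python sketch below shows that rule; the 1-to-5 scores, the threshold of 3 and the example matters are hypothetical, since ESRS leaves scales and thresholds to each undertaking’s documented methodology.

```python
from dataclasses import dataclass

# Hypothetical scoring scale (1 = negligible, 5 = severe). ESRS does not
# prescribe scales or thresholds; each undertaking documents its own.
THRESHOLD = 3

@dataclass
class SustainabilityMatter:
    name: str
    impact_score: int     # impact on people and the environment
    financial_score: int  # financial effect on the undertaking

def assess(matter: SustainabilityMatter, threshold: int = THRESHOLD) -> dict:
    """Return which materiality perspectives a single matter meets."""
    impact = matter.impact_score >= threshold
    financial = matter.financial_score >= threshold
    return {
        "matter": matter.name,
        "impact_material": impact,
        "financial_material": financial,
        # Double materiality: material if either perspective (or both) is met.
        "material": impact or financial,
    }

if __name__ == "__main__":
    for m in [
        SustainabilityMatter("Data centre energy use", impact_score=4, financial_score=2),
        SustainabilityMatter("Supplier data breach", impact_score=2, financial_score=4),
        SustainabilityMatter("Office paper use", impact_score=1, financial_score=1),
    ]:
        print(assess(m))
```

The financial-perspective scores are where cyber and technology controls matter most: if the data feeding them cannot be trusted, neither can the conclusion.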

Audit Requirements

The European Sustainability Reporting Standards (ESRS) introduce an extensive set of sustainability disclosures for in-scope companies. Because the double materiality assessment required under these standards considers both financial effects and impacts, there must be robust data and documentation, with sufficient transparency about how input values were determined. Further, as with financial reporting, the process used has to be repeatable in subsequent years.
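One way to make input values transparent and the process repeatable is to record each value with its source and method, plus a cryptographic fingerprint chained to the previous entry, so that any later alteration is detectable. This is a minimal sketch using Python’s standard `hashlib` and `json` modules; the record fields and example metrics are hypothetical, not an ESRS-defined schema.

```python
import hashlib
import json
from datetime import date

def fingerprint(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, fixed separators)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def log_input(ledger: list, metric: str, value: float, unit: str,
              source: str, method: str) -> dict:
    """Append an input value, its provenance and its fingerprint to a ledger."""
    record = {
        "metric": metric,
        "value": value,
        "unit": unit,
        "source": source,   # system of record the value came from
        "method": method,   # documented calculation or estimation method
        "recorded": date.today().isoformat(),
        # Chain to the previous entry so edits anywhere in the ledger show up.
        "previous": ledger[-1]["hash"] if ledger else None,
    }
    record["hash"] = fingerprint(record)
    ledger.append(record)
    return record

def verify(ledger: list) -> bool:
    """Recompute every fingerprint and confirm the chain is unbroken."""
    previous = None
    for entry in ledger:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["previous"] != previous or fingerprint(body) != entry["hash"]:
            return False
        previous = entry["hash"]
    return True
```

Re-running `verify` on last year’s ledger before reusing its inputs gives the auditor a simple, repeatable check that nothing has changed since sign-off.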

SEC Equivalence

Although this is EU legislation, it applies to organisations outside the EU, as do GDPR, NIS2, PSD2, etc. There is also a provision for cases where the Securities and Exchange Commission’s (SEC’s) climate-related disclosure rules are equivalent to, or more stringent than, the EU regulatory provisions.

However, the SEC’s rules are on hold while court challenges are heard. Even so, companies need to prepare for the possibility that some or all of the rules will indeed come into effect.

Additionally, a growing number of US states, as well as other countries, are requiring similar disclosures within the non-financial reporting for filed accounts. These can include quantitative and qualitative measures of operational impacts, as well as a defined measure of progress towards sustainability goals.

The Broader Impact of Non-Compliance

Compliance matters because, beyond financial penalties and, in France, the potential imprisonment of management, failing to comply with the CSRD can have broader implications, including, but not limited to:

Reputational damage leading to loss of trust among investors, customers, and other stakeholders.

Operational disruptions resulting from the penalties imposed, thereby impacting free cash flow and overall capital availability for investment.

Additionally, the increased associated scrutiny may disrupt business operations, again diverting resources away from core activities, in order to remediate compliance issues.

External auditors will also seek to examine financial records in far greater detail, along with operational process audits, raising audit costs substantially; for companies of in-scope size, this may run into millions of dollars or euros.

Competitive disadvantage may also result from non-compliance, where competitors may be viewed as being more transparent and committed to sustainability, as well as having lower future non-compliance risks for capital markets and investors.

Software Tools for Compliance

At present, another challenge for organisations is the lack of off-the-shelf products to ease the transition into a compliant entity.

For Sarbanes-Oxley (SOX), SAP, a major player for these in-scope organisations, has in-built SOX compliance modules, and a number of third-party vendors offer add-on applications for SAP to assist SOX compliance (Zluri, Lumos, Okta, Saviynt, amongst others). No similar product exists for this directive at present, so many companies have had to develop their own proprietary applications to suit their individual operations.

Targets for Ecoterrorists & Environmental Groups

Increased regulation of sustainability objectives may also give certain groups the motivation to use cyber attacks to seek out data proving non-compliance, to manipulate records so that audits reveal non-compliance, or to undermine any AI/LLM models that a target organisation uses in its assessment and quantification process.

There has been a shift in tactics by environmental groups, away from direct action and towards cyber-warfare, using the dark web to hire those with the requisite skills to exfiltrate, corrupt or destroy data.

Further, within the existing cyber threat environment, data has shown that cyber attacks are more frequent in countries and US states with more onerous and punitive data privacy laws than in those without them. The same pattern may well repeat itself here, if malicious actors believe (rightly or not) that financial penalties, the risk of management imprisonment, and greater regulatory oversight and audit examination will offer the same financial payout as prior ransomware attacks.

On this, we will have to wait and see.

Hierarchical Considerations in Cyber Risk Assessments

With the widespread adoption of AI/large language models across sectors & geographies, there has been a recent shift in the regulatory environment due to perceived threats to personal data and privacy, exacerbated by the current geopolitical climate.

Other changes within the cyber domain manifested themselves earlier, and the CrowdStrike global outage has also changed mindsets across different cyber domains and within organisations.

Organisational aspects now carry greater importance, with ISO/IEC 27001:2022 and NIST CSF 2.0 being prime examples: both now make training and data governance/stewardship a key focus within cyber risk controls.

There are two fundamental approaches to cyber security and risk management, top-down and bottom-up, and the introduction of emergent technologies has created a need, in all organisations, to encompass the new regulatory and standards requirements.

By coincidence, I was invited to write a chapter on the organisational and data flow changes that these two facets entail, in the newly released book entitled “Volume V Cybersecurity Risk Management”, published by de Gruyter.

My contribution is entitled “Hierarchical considerations in cyber risk assessments: Strategic versus operational prioritization in managing current and emergent threats”.

Working with IT personnel from some national brand entities at the end of last year and into this one led to the realisation that traditional organisational structures, lines of communication and data provision for strategic cyber threat controls were no longer appropriate.

Some large-scale organisations have reformulated their hierarchies and governance in light of the step-change impact of AI/LLMs, which I have included in this current work.

https://www.degruyter.com/document/doi/10.1515/9783111289069-002/html

CrowdStrike – a Lesson to All?

The CrowdStrike update that caused the world’s largest IT outage to date has generated many comments, but its impact raises not just questions about the company, but also several lessons across multiple sectors, some more obvious than others.

The very first question for CrowdStrike is how its enforcement of the SDLC, such a fundamental IT general control, failed so badly. How did that code move from the development to the production environment without sufficient testing? A follow-on, less obvious question is whether CrowdStrike uses offshore or hybrid software developers, either offshore-owned or as contractors. This is a cost-efficient mode of development used by most large entities.

The next major question is whether the company is certain that this was not a cyber-related failure arising from AI hallucination and developers’ use of LLMs in their daily coding work. This is more common than one might think, and controlling offshore developers is notoriously difficult, yet cost reductions are driven by ownership structures (in this case, a listed entity that needs to generate shareholder returns).
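The SDLC question is, in control terms, a question about change management: a release should reach production only after passing automated tests, receiving independent approval and surviving a limited (canary) rollout. The Python sketch below shows such a promotion gate; the stage names, the 1% canary failure threshold and the `Release` fields are assumptions for illustration and say nothing about CrowdStrike’s actual pipeline.

```python
from dataclasses import dataclass, field

# Assumed pipeline stages and tolerance; real values are organisation-specific.
STAGES = ["development", "test", "staging", "canary", "production"]
MAX_CANARY_FAILURE_RATE = 0.01  # at most 1% of canary hosts may fail

@dataclass
class Release:
    version: str
    stage: str = "development"
    tests_passed: bool = False
    author: str = ""
    approved_by: str = ""   # segregation of duties: approver must not be the author
    canary_hosts: int = 0
    canary_failures: int = 0
    history: list = field(default_factory=list)

def can_promote(r: Release) -> tuple[bool, str]:
    """Apply the control checks required to move a release to its next stage."""
    if r.stage == "production":
        return False, "already in production"
    if not r.tests_passed:
        return False, "automated tests have not passed"
    if not r.approved_by or r.approved_by == r.author:
        return False, "independent approval missing"
    if r.stage == "canary":
        if r.canary_hosts == 0:
            return False, "no canary telemetry"
        if r.canary_failures / r.canary_hosts > MAX_CANARY_FAILURE_RATE:
            return False, "canary failure rate above threshold"
    return True, "ok"

def promote(r: Release) -> str:
    """Move the release one stage forward if every check passes; return the reason."""
    ok, reason = can_promote(r)
    if ok:
        r.history.append(r.stage)
        r.stage = STAGES[STAGES.index(r.stage) + 1]
    return reason
```

A release at the canary stage with 500 failures out of 1,000 hosts is blocked, whereas 2 failures out of 1,000 allows promotion to production.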

In terms of lessons: which cyber re/insurer or CRQ company models came closest in predicting the financial losses? And how can such incidents be embodied within T&Cs, for everybody’s clarity, when impacts from AI hallucination may be classed as cyber-related losses?

Another major lesson concerns vendor risk management, given that the impact on MS365/Teams cloud-based applications was caused by a third-party cloud security vendor.

The Thales 2022 report highlights that, due to a global lack of specialist cloud security resources, over 70% of entities rely on either the cloud provider or a third-party vendor for their cloud security. The limited number of vendors exacerbates the issue.

Finally, IT outage models need to account for such cascading impacts. Certain industries are well versed in this, but risk carriers and CRQ vendors focus on cloud outages rather than non-cyber IT incidents. This may change as a result of the CrowdStrike event.
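Cascading impacts can be modelled as reachability in a dependency graph: when one component fails, every service that depends on it, directly or transitively, is affected. The Python sketch below runs a breadth-first search over a small dependency map; the component names are entirely hypothetical.

```python
from collections import deque

# Hypothetical map: component -> components/services that depend on it.
DEPENDENTS = {
    "security_agent_update": ["windows_endpoints"],
    "windows_endpoints": ["email_client", "pos_terminals", "check_in_kiosks"],
    "email_client": ["customer_support"],
    "pos_terminals": ["retail_sales"],
    "check_in_kiosks": ["flight_departures"],
}

def affected_by(failed: str, dependents: dict) -> list:
    """Return every component reachable from the failed one, in breadth-first order."""
    seen = {failed}
    order = []
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in seen:  # visit each component once, even with shared dependencies
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

if __name__ == "__main__":
    print(affected_by("security_agent_update", DEPENDENTS))
```

Attaching an estimated loss per hour to each affected node would turn this list into a financial figure for an outage model.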

“What have Texas and judgment preservation insurance got to do with my AI, quantum & cyber risk management programs?”

To explain the implications, we need to understand that there is a current patent goldrush to file applications relating to AI, quantum & graphene chip technology, allied to a new option for patent owners to monetise their portfolios.


Before 2014, non-practising entities labelled trolls (Intellectual Ventures, Acacia Research, RPX, etc.) hoovered up patents to monetise them, since it was relatively easy to secure damages or, more commonly, simply to sell portfolios as part of overall litigation risk control.

Alice v. CLS Bank (2014) changed patent eligibility: the US Supreme Court held that an abstract idea implemented on a generic computer is not patentable, which undermined many method patents underpinning software, and applications now need to show a clear technological improvement.

With the technologies mentioned above, the methods are clearly tied to a technology, hence the surge in applications in an area of critical importance, as evidenced by government mandates to secure emerging technologies for domestic use and protection as soon as possible.

Meanwhile, following the demise of the patent troll ecosystem, patent owners faced severe financial obstacles in asserting their IP rights, with millions of dollars required to battle tech behemoths and a high risk of their patents being invalidated on appeal.

Over the past 24 months, the re/insurance sector has given smaller firms the ability to take on the giants through judgment preservation insurance, whereby a patent holder pays a premium of $x million to secure $y million in damages awarded through the courts. This has made litigation a viable option for smaller entities with key technology-related patents.
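The holder’s trade-off can be sketched with simple arithmetic. The Python below assumes, hypothetically, a policy that pays a fixed limit if the judgment is overturned on appeal, and uses illustrative figures only; real policies vary in structure and terms.

```python
def jpi_outcomes(award: float, limit: float, premium: float) -> dict:
    """Net recovery to the patent holder under each appeal outcome (hypothetical policy)."""
    return {
        "upheld_uninsured": award,
        "reversed_uninsured": 0.0,
        "upheld_insured": award - premium,    # premium is paid whatever happens
        "reversed_insured": limit - premium,  # policy pays the limit on reversal
    }

def expected_recovery(award: float, p_reversal: float,
                      limit: float = 0.0, premium: float = 0.0) -> float:
    """Probability-weighted recovery; limit=premium=0 models the uninsured case."""
    return (1 - p_reversal) * (award - premium) + p_reversal * (limit - premium)
```

With an award of $100 million, an $80 million limit, a $15 million premium and a 10% chance of reversal, insurance lowers the expected recovery from $90 million to $83 million but raises the worst case from nothing to $65 million, which is what makes funding the litigation viable.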

Allied to this, the Eastern and Western District of Texas courts have found for patent owners in a high percentage of cases, so any litigation documentation relating to the Texas courts should be taken as a warning by recipients.

With cases such as Apple v. Masimo, Google v. Singular and Cloudera v. StreamScale, vendor risks range from direct cessation of supply to being joined in litigation for indirect patent infringement under 35 U.S.C. § 271, and such risks require greater due diligence at purchasing and usage decision milestones.

Vendor lock-in risk management is one element of any cyber/AI risk assessment exercise, but how many organisations check the likelihood of service or product interruption arising from this new environment of increased litigation, especially in the Texas courts? Check your vendors’ patents as part of your IT/cyber/AI/quantum risk management exercise, and identify patent owners who may pose a risk to your continued use before product or service dependency becomes costly to overcome.

Mitigation Through Risk Transfer – Still Valid as an Option?

Is cyber risk transfer through financial instruments still a valid mitigation/strategic option?

- A number of US states have now made ransomware payments by public bodies illegal;

- The Merck settlement took over 5 years, and with war exclusion clauses in place eliminating cover for the impacts of state-sponsored attacks, what other considerations are required?

- Those states that have public laws against ransomware payments have a lower incidence than others;

- Entities stating they have substantially increased ITSEC spend have been targeted less than those who do not make such public statements;

- Cyber re/insurance premiums increased 50-70% over the past 12 months;

- Attachment points are high and most cover is excess of loss (XoL) or has low limits (the Yahoo hack of 2016 cost over $175 million; higher levels are needed);

- Only 4 major ILW/ILS deals were announced in 2023; those pushing for alternative risk transfer (ART) via financial instruments are within the financial markets or are VC-funded CRQ entities desperate to find a pathway out of a high burn rate;

- AI and post-quantum cryptographic vulnerabilities mean there is zero data and no experience of what will happen when malicious actors exploit these areas, and risk carriers have no idea what individual, aggregated or sectoral impacts they will have.
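The point about attachment points and limits can be made concrete with the standard excess-of-loss layer formula, payout = min(max(loss − attachment, 0), limit). In the Python sketch below, only the $175 million loss comes from the Yahoo example in the list above; the $25 million attachment and $50 million limit are hypothetical.

```python
def layer_payout(loss: float, attachment: float, limit: float) -> float:
    """Amount an excess-of-loss layer pays: the loss above the attachment, capped at the limit."""
    return min(max(loss - attachment, 0.0), limit)

def retained(loss: float, attachment: float, limit: float) -> float:
    """What the insured bears itself: everything the layer does not pay."""
    return loss - layer_payout(loss, attachment, limit)

if __name__ == "__main__":
    loss = 175e6        # Yahoo 2016 example cost, from the text
    attachment = 25e6   # hypothetical attachment point
    limit = 50e6        # hypothetical layer limit
    print(layer_payout(loss, attachment, limit))  # 50000000.0
    print(retained(loss, attachment, limit))      # 125000000.0
```

Even with a layer in place, the insured in this illustration retains $125 million of a $175 million loss, which is the gap the bullet points describe.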

What causes the highest level of cyber breaches and vulnerability compromises? Human error.

Years of data amply demonstrate the cost-benefit of training & education.

Allocate budget to ITSEC & training for the best bang-for-buck & forget ILS/ILW – look to captive options if there is a pressing mandate to use a financial instrument to mitigate cyber exposure.

Allocate capital to ITSEC & personnel up-skilling, rather than face years of litigation over whether a carrier should pay out – look at the Covid-19 business interruption (BI) claims history for clear evidence of what your organisation’s cyber resilience needs.
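The cost-benefit argument for training can be checked with the commonly used return on security investment (ROSI) calculation, which compares the reduction in annualised loss expectancy (ALE) with the cost of the control. The Python sketch below uses purely hypothetical figures, not values drawn from the data referred to above.

```python
def ale(annual_rate: float, single_loss: float) -> float:
    """Annualised loss expectancy = annual rate of occurrence x single loss expectancy."""
    return annual_rate * single_loss

def rosi(ale_before: float, ale_after: float, cost: float) -> float:
    """Return on security investment: net risk reduction per unit of spend."""
    return (ale_before - ale_after - cost) / cost

if __name__ == "__main__":
    # Hypothetical phishing-led incidents, before and after an awareness programme.
    before = ale(annual_rate=4.0, single_loss=250_000)  # 1,000,000 per year
    after = ale(annual_rate=1.5, single_loss=250_000)   # 375,000 per year
    print(rosi(before, after, cost=150_000))            # approximately 3.17
```

A ROSI above zero means the control saves more than it costs; the same calculation can be run against a premium quote to compare training spend with risk transfer.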

Vendor Risks Are Higher With AI Trends

Cyber security covers many areas, most of them technical, legal, contractual or protection-based.

However, where do assessment frameworks include a background check on the vendor itself, such as its funding and location (e.g. incorporation in an anonymous-LLC state)?

Importantly, with an AI/quantum patent goldrush under way, due diligence is required on patents: the first filing date and any assignment to another entity.

Software/service risks emanating from patent ownership are not generally identified as belonging within vendor selection risk assessments.

The Cloudera case highlights continuity-of-supply risks where a small entity owns patents, certified software code, trade secrets, etc. Where a small entity can successfully assert its rights against a major provider, as we have seen with the Apple smartwatch case, it may also be able to force the major player to cease supply.

The quickest approach for non-legal, more IT-focussed personnel involved in the initial vendor selection process is simply to undertake the following actions:

1. Funding: is the entity VC-funded? If so, remember that a VC’s objective is to generate profits via divestment, which may include termination of the entity’s core offering if, for example, the client base or access is the acquirer’s rationale. Use the likes of Crunchbase to identify funding sources;

2. Use Google Patents and the USPTO’s patent search to find the company’s patent filings. The latter provides detailed patent application documentation, such as the examiner’s search results, which can identify potential patent assertion risks from competitors who own patents;

3. Ensure that products or services used from a vendor do not carry your organisation’s name, a reference to you as a user, or any other reference, in order to avoid any potential risk as a contributory infringer under 35 U.S.C. § 271;

4. Require vendors, in pre-contract documentation, to disclose a list of owned patents, as well as any assignments of them. This should also list failed or abandoned patent applications, which should then be the focus of further investigation into why an application did not proceed to allowance.
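The four checks above can be captured as a simple screening record, so that IT teams flag vendors for legal or IP follow-up consistently. The Python sketch below is a hypothetical structure: the field names, and the rule that any single flag triggers review, are assumptions, and the data would be gathered manually from sources such as Crunchbase, Google Patents and the USPTO rather than fetched by this code.

```python
from dataclasses import dataclass, field

@dataclass
class VendorScreen:
    name: str
    vc_funded: bool                                              # check 1
    examiner_cited_owners: list = field(default_factory=list)    # check 2: patent owners cited by examiners
    names_us_as_customer: bool = False                           # check 3
    disclosed_patent_list: bool = True                           # check 4
    assigned_patents: list = field(default_factory=list)         # check 4
    abandoned_applications: list = field(default_factory=list)   # check 4

def flags(v: VendorScreen) -> list:
    """Return human-readable reasons to escalate a vendor to legal/IP review."""
    out = []
    if v.vc_funded:
        out.append("VC-funded: review exit/acquisition risk to core offering")
    if v.examiner_cited_owners:
        out.append(f"{len(v.examiner_cited_owners)} third-party patent owner(s) cited by examiners")
    if v.names_us_as_customer:
        out.append("our name used in vendor materials: contributory infringement exposure")
    if not v.disclosed_patent_list:
        out.append("patent list not disclosed pre-contract")
    if v.assigned_patents:
        out.append(f"{len(v.assigned_patents)} patent(s) assigned to another entity")
    if v.abandoned_applications:
        out.append(f"{len(v.abandoned_applications)} abandoned application(s): investigate why")
    return out
```

An empty list means no flag was raised, not that the vendor is risk-free; the record simply makes the checks repeatable across selection exercises.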

As we move deeper into the AI domain, followed by quantum, all entities relying on an external provider should add patent infringement risk to their core vendor selection process.

Within our own segment, cyber risk quantification, the number of patent holders is small, making it easy for risk carriers to identify the contributory infringement risks of using a vendor, or of being associated with one through vendor press releases and social media posts.

Within AI, a high-velocity segment, the task is far more difficult, and it should not fall purely to an organisation’s legal or IP department to second-guess the hardware, software and services being considered by IT security departments.

Is Cyber an Insurable Risk?

Allianz’s Risk Barometer 2024 raises a crucial point: is cyber still an insurable risk?

According to Allianz, these growing doubts create a need to expand the role of cyber insurers beyond simply paying claims, leveraging their risk expertise to promote prevention and preparedness and so enhance resilience.

Such a collaborative approach was first seen in cyber insurance as far back as 2000, when a German re/insurer worked with the German IT security company Secunet, which provided ITSEC auditing as part of the underwriting process as well as post-breach claims services (ensuring security patches had been applied in a timely manner, etc.). As such, the Allianz approach can hardly be seen as innovative.

What is important, however, is the recognition that every organisation differs in its IT systems, topologies, operational processes, risk appetite and cyber security maturity, making peer-group comparison for cyber risk financial quantification a fallacy.

Rather, the way forward, as proposed in the Allianz report, is working on an individual basis per client in understanding the management’s business objectives and strategies at the C-suite level, followed by how these imperatives cascade down into the entity all the way down to the highly specialised skills in securing the organisation concerned.

This is increasingly the case with the en-masse global roll-out of large language models across sectors, and a complete lack of threat vector, attack surface and claims experience from which to model new cyber threats. These may range from AI model compromise to quantum cryptographic brute-force attacks on encrypted proprietary data.

Risk carriers relying on prior CRQ models, and investors in ART instruments such as ILS/ILW, are therefore basing their risk assumptions on outdated, already flawed models, given the emergent technologies of the past 12 months and those still emerging, such as commercially viable graphene chips for neural network accelerators.

Quantum Cryptographic Compromise – What Next?

Microsoft’s integration of OpenAI’s chatbot into its Windows 11 OS, together with a new dedicated keyboard key to call it up, raises a number of issues, spanning:

- Cyber security

- GRC

- ERM

- ESG

- Legal

- Anti-trust

In turn:

The cyber security risk of AI package hallucination for developers using code repositories is already maturing. For those unfamiliar with it, put simply: a developer can shortcut a coding issue by asking a GenAI engine for a solution; the engine refers the developer to a non-existent package location, which a malicious actor asking the same question has already created and filled with infected code; the developer then integrates that code as a fix;

Governance issues arise from the use of data external to an organisation and the sheer volumes used in GenAI models, which in turn create data compliance risks. An output from a GenAI engine that causes a subsequent regulatory breach may expose an organisation not just to a finding of breach, but to increased external audit costs: governance, ITGCs and data privacy laws become of higher concern and so attract deeper inquiry by auditors, with at least one year’s uplift in audit costs, potentially costing millions of dollars in additional time-costs;

ERM risks are multi-faceted, but taking just strategy and policy into account, how will entities that do not want their employees to use chatbots at work actually control and enforce this? Managers and executives are already using OpenAI’s Whisper in conjunction with Google Colaboratory to transcribe voice to text after meetings, especially in time-constrained or multi-language situations. What strategy is required in adopting or rejecting AI within organisations, and what policies will be required?

ESG issues arise from how entities will square the circle of espousing ESG policies whilst using GenAI products such as Office 365 and Windows 11 in the working environment, when those products rely on GenAI model training carried out in the lowest-cost developing countries, especially in Africa, essentially locking workers there into continued low-paid work whilst the owners of the technology build increasing profits;

Legal issues remain unresolved at this point, both as to liability for decisions based on GenAI engine outputs and in IP cases relating to copyright, including the potential for contributory infringement by users of a GenAI engine.

Anti-trust concerns recall past cases of Microsoft bundling its Internet Explorer browser into versions of Windows, which was found to be illegal across multiple territories.
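One common mitigation for the package hallucination risk described above is to install dependencies only from a vetted list, so that a name suggested by a GenAI engine is checked before use. The Python sketch below assumes a hypothetical internal allowlist and simple requirement lines such as `name==version`; in practice this is usually enforced through a private package mirror or a CI check.

```python
import re

# Hypothetical internal allowlist of vetted package names.
APPROVED = {"requests", "numpy", "pandas", "cryptography"}

def normalise(name: str) -> str:
    """Normalise a package name as PEP 503 does: lowercase, runs of -_. become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()

def unapproved(requirements: str, approved=APPROVED) -> list:
    """Return requirement names not on the allowlist (comments and blank lines ignored)."""
    allowed = {normalise(a) for a in approved}
    rejected = []
    for line in requirements.splitlines():
        line = line.split("#", 1)[0].strip()  # drop inline comments
        if not line:
            continue
        # The name ends at the first whitespace, extra, version specifier or marker.
        name = re.split(r"[\s\[<>=!~;]", line, maxsplit=1)[0]
        if normalise(name) not in allowed:
            rejected.append(name)
    return rejected
```

Any name returned here, such as one suggested by a chatbot but never vetted, would be held back for review rather than installed.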

We are still finding our way with AI in its newest manifestation, and ongoing questions and issues will task every major decision maker, from commerce to governments and beyond.

https://www.reuters.com/technology/microsoft-adds-ai-button-keyboards-call-up-chatbot-2024-01-04/

Quantum Cryptographic Compromise – What Next?

2024 brings another insidious cyber threat for all organisations to contend with, in the form of quantum computing cryptographic threats. This has long been known: NIST, for example, has been developing future encryption protocols through open discussion since 2016, with the final discussion concluding in Q4 2023.

Previously, the threat was viewed as a medium- to long-term one and thus not in need of immediate attention. However, as with the deployment of generative large language models and their associated threats last year, the timeline for a post-quantum cryptographic environment, estimated first at 15, then 10, then 5 years, has shrunk until it has become a present-day threat.

Encryption forms the backbone of all computing environments (ATMs, internet banking, social media platforms, military communications, missile guidance systems, etc.) and is thus critical globally.

Taking a real-world example, in 2022 the 66-qubit Zuchongzhi 2.1 quantum computer completed a task in 4 hours that would have taken a current supercomputer 48,000 years. In 2023, IBM delivered a 1,121-qubit quantum processor.

Who is concerned with the threat? The US government, with the H.R. 7535 Quantum Computing Cybersecurity Preparedness Act; the World Economic Forum (Global Risk Institute Quantum Threat Report); NIST; CISA; CSA; and, finally, venture capitalists.

The latter are betting big on start-ups with solutions to the issue, with the likes of PsiQuantum, Rigetti & ISARA all launching with products to meet the threat, each filing multiple US patents.

How, then, will quantum computing affect existing cryptographic methods? According to Shor’s factorization algorithm, breaking encryption algorithms through quantum mechanics, using the Quantum Fourier Transform, is attainable: the algorithm factors integers in polynomial time, rather than the super-polynomial time required by the best known classical algorithms. In essence, Peter Shor demonstrated that a quantum computer is capable of factoring very large numbers in polynomial time, which undermines public-key schemes such as RSA whose security depends on factoring being hard.
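Shor’s algorithm reduces factoring N to finding the period r of a^x mod N; the quantum Fourier transform is what finds r efficiently, and the rest is classical. The toy Python sketch below shows that classical reduction. Here the period is found by brute force, which is exactly the step that takes exponential time classically for large N, so it illustrates the reduction only, not a quantum speed-up.

```python
from math import gcd

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r == 1 (mod n), by brute force (the step a quantum computer speeds up)."""
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical(n: int, a: int):
    """Try to split n using base a; return a factor pair, or None if this base fails."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g           # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                # odd period: retry with another base
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                # a^(r/2) == -1 (mod n): retry with another base
    return gcd(y - 1, n), gcd(y + 1, n)

if __name__ == "__main__":
    print(find_period(7, 15))      # 4
    print(shor_classical(15, 7))   # (3, 5)
```

For N = 15 and a = 7 the period is 4, 7² mod 15 = 4, and gcd(3, 15) and gcd(5, 15) give the factors 3 and 5; an RSA modulus is the same problem at a scale only a large quantum computer could handle.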

During 2023, a number of security product entities & cyber risk modellers jumped on the AI bandwagon, proclaiming their products & models to be using AI where it was absent even a few months earlier.

How, then, can such a cyber threat, with global impact potential across so many sectors, be modelled for alternative risk transfer or cyber re/insurance pricing and underwriting when no data currently exists? During 2023, 4 cyber-CAT alternative risk transfer products launched, from the likes of Swiss Re and Beazley, as cyber-CAT bonds and cyber industry loss warranties.

How have the VC entities and funders of cyber risk modelling firms creating ILS/ILW-type cyber risk transfer products taken into account the insidious quantum computing cryptographic risks, which, according to those who should know, are no longer a future threat but a very real and imminent one?

https://csrc.nist.gov/news/2023/three-draft-fips-for-post-quantum-cryptography

There is an increasing number of standards for IT security. Do you need them as proof of GDPR compliance?

Does your company certify for various areas of operation? Perhaps one or more of Business continuity ISO 22301; Information Security Management ISO/IEC 27000; Risk Management ISO 31000; Environmental Management ISO 14000, or the most popular, Quality Management ISO 9000?

ISO has attempted to keep pace with technological changes, with updates to ISO27000 and 22301 to embody regulatory changes such as the GDPR. Impact assessments and risk assessments are now more clearly matched with data privacy standards. So do you really need to certify your organization to mitigate the risk of non-compliance by proving your organization has maintained the highest IT security standards?

The Challenge

Cases such as those of British Airways and the Marriott hotel group in the UK raise the question of how to prove that your data protection is secure and compliant in order to avoid prosecution for negligence. In the British Airways case, the Regulator (ICO) imposed the financial penalty because customer data had been “compromised by poor security arrangements at the company”. By contrast, in the Marriott case, it was not a failure of existing operations that caused the fine, but that the company had “failed to undertake sufficient due diligence” when it purchased a company that had suffered the cyber-attack (Starwood Hotel Group).

In both instances, the size of the fines was such that the ICO clearly believed the failures were criminally negligent. So how do you demonstrate that your company is not negligent, and what evidence will the Regulator accept as proof in rebuttal?

With the GDPR in operation since 2018 and the US CCPA (California Consumer Privacy Act) only coming into force in January 2020, it is still too early for the number of cases brought by regulators to indicate what constitutes proof of intent to comply and an absence of negligent conduct.

The Solution

Whilst certification to standards such as ISO/IEC 27001 can provide auditable proof of compliance with an accepted security standard, it does not of itself prove that your company’s management of data is compliant. How can this be so? Taking ISO 9001 as a prime example, although the standard sets out what needs to be done to qualify for certification, the management system put in place to certify against the standard may not be fit for purpose. When compared with another entity’s far more granular management method, it may be a qualifying system, but not the best.

The same applies to most certification standards and bodies. Certification against one does not provide unassailable proof that there has been no negligence in managing data to the standard required by data protection law. It can, however, indicate the methods, management style and processes in place, which can assist a Regulator in determining fault.

The greater the prima facie evidence your company can provide to a Regulator in cases of breach, the greater the likelihood that the Regulator will accept that there was no intent to breach the relevant data protection standard.

Quantar can assist in the provision of auditable proof through the implementation of our CyCalc software solution. This uses client-specific data, external data and actual threat data to extrapolate and quantify business process financial exposures. It also provides “what-if” capability in order to model scenarios and changes made to systems and processes.

Using CyCalc demonstrates a clear intent to comply, by identifying those risks and their values. The historic data plots your security maturity over time, further strengthening arguments against negligence. In this increasingly regulated environment, such data has become even more important.
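Quantifying financial exposure with a “what-if” capability is often done with a frequency-severity Monte Carlo simulation. The Python sketch below is a generic illustration of that technique, not a description of how CyCalc works; the Poisson event frequency, lognormal loss severity and all parameter values are assumptions chosen for the example.

```python
import math
import random
import statistics

def poisson(rng: random.Random, lam: float) -> int:
    """Sample a Poisson count with Knuth's multiplication method (fine for small lam)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def simulate_annual_loss(freq: float, mu: float, sigma: float,
                         trials: int = 20_000, seed: int = 1) -> list:
    """Annual losses: Poisson(freq) incidents per year, each with lognormal(mu, sigma) severity."""
    rng = random.Random(seed)
    return [
        sum(rng.lognormvariate(mu, sigma) for _ in range(poisson(rng, freq)))
        for _ in range(trials)
    ]

def summary(losses: list) -> dict:
    """Mean annual loss and a simple 95th-percentile estimate."""
    ordered = sorted(losses)
    return {
        "mean": statistics.fmean(losses),
        "p95": ordered[int(0.95 * len(ordered)) - 1],
    }

if __name__ == "__main__":
    severity = math.log(100_000)  # hypothetical median loss per incident
    baseline = summary(simulate_annual_loss(2.0, severity, 1.0))
    # What-if: a control change assumed to cut incident frequency to 0.4 per year.
    what_if = summary(simulate_annual_loss(0.4, severity, 1.0))
    print(baseline, what_if)
```

Storing each run’s inputs and outputs over time is what turns such a model into the historic record of security maturity described above.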

Dynamic environments create risks of uncertainty in delivery of products & services. How can you meet the challenge?

Traditional project management methods utilised a waterfall approach whereby the end result was the objective, with all activities, planning and budgeting targeting this final delivery of the specified product/service.

By contrast, the Dynamic Systems Development Method (DSDM) was originally labelled a Rapid Application Development approach. With DSDM Agile, prototypes are built and then evolved into a delivered solution.

Why then would an Agile approach be better suited to data governance and compliance than a more classic project approach – or is it?

The Challenge

Dynamic environments require dynamic approaches to managing the variables within them. Data acquisition, processing and extrapolation have undoubtedly been an incredibly dynamic environment for organizations globally over the past 2-3 years.

Meeting the needs of business operations when major competitors out-resource your company through better use of data is but one such challenge. Big data, distributed ledgers, new entrants facing lower barriers to entry, cloud migration and data volumes have all shifted at an increasing rate. In many instances, a few global players now act as gatekeepers.

How, then, can you keep up, or find a product or service and the means of creating and distributing it with sufficient margin to justify a business case, in this maelstrom of change?

The Solution

Traditional approaches focus on business goals and on meeting business objectives. They take a strategic perspective, consider risks and whether there is a sufficient business case to proceed, and then impose a structure for delivering those components in a final form.

The classical approach requires planning the structure of a project (budget, timescale, work required, resources, etc.), considering everything at every level of the project. It adds a temporal component, ranging from the short term (which activities are executed when, and what teams will undertake at specific times) to the long term (the course of the project itself).

It can be argued that a classic approach is safer for a company to use for its project and program methodologies, since such methods account for risk. There are plans for what should happen, but the approach also considers what may happen and plans for it by considering risks, how they can be dealt with and how they can be prevented or minimised.

By contrast, DSDM Agile does not impose a project structure. Instead, it is a way of thinking about how the work that goes into the project should be organised and performed, with the work being iterative and incremental and focussed on customer requirements and the product.

DSDM Agile has a product rather than a strategy focus, and makes the key assumption that the product (or service as a product) will be of worth to the business if it is delivered in the correct manner. With DSDM Agile, the ultimate goal is to ensure that the product meets the client's needs, with the work executed as efficiently as possible.

Rather than treating a final product as the deliverable and planning how to deliver it, DSDM Agile delivers the product incrementally: features are added piecemeal, incorporating feedback, until all customer requirements are met. In this way, the product at the commencement of the project will not be the final one delivered.

Most people can recall a project or plan that changed fundamentally between the first idea and what actually happened on the journey to the end. The same is true of dynamic environments, with constantly shifting client expectations and consumption habits, and margins that shift dramatically due to technology.

It is for these reasons that a DSDM Agile approach to projects and programs is best suited to the present, and most likely the future, operating environment. Communication with stakeholders is emphasised over documentation or following a rigid structure. With less up-front planning, adaptability increases. Where predictability is low, DSDM Agile’s responsive approach can make the difference between delivering a competitive product or service and one mismatched with customer expectations.

Having a GDPR program does not mean it will become embedded successfully within an organization

A theory is emerging in behavioural science labelled “cognitive uncertainty” (Enke & Graeber; Harvard University). It seeks to explain why people facing unknown odds act as if they were facing ones with greater certainty. This usually manifests as a belief that the odds they face are closer to 50:50 than, say, 10:90 against. Enke & Graeber believe that uncertainty creates a lack of confidence: people may think their sums are not right, that they have misunderstood, or that their memory is failing them when making a judgement.

If this theory is correct, and there is plenty of previous research on judgement and decision making to back it up, then how your company manages data risk and compliance programs may be influenced by it.
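The drift toward 50:50 described above can be pictured with a stylised model. This is our own illustrative sketch, not Enke & Graeber's estimated model. It assumes that a judged probability is a weighted average of the true probability and a 50:50 default, and the `confidence` weight is a hypothetical parameter.

```python
def perceived_probability(true_p: float, confidence: float, default: float = 0.5) -> float:
    """Stylised judgement: a weighted average of the true probability and a default.

    confidence = 1.0 -> the judgement equals the true probability;
    confidence = 0.0 -> the judgement collapses to the 50:50 default.
    The linear form and the `confidence` parameter are illustrative assumptions.
    """
    for value in (true_p, confidence, default):
        if not 0.0 <= value <= 1.0:
            raise ValueError("inputs must lie in [0, 1]")
    return confidence * true_p + (1.0 - confidence) * default


# A compliance failure that is really 10:90 (p = 0.10), judged with falling confidence.
for c in (1.0, 0.6, 0.2):
    print(f"confidence={c:.1f} -> perceived p={perceived_probability(0.10, c):.2f}")
# confidence=1.0 -> perceived p=0.10
# confidence=0.6 -> perceived p=0.26
# confidence=0.2 -> perceived p=0.42
```

The less confident the judgement, the further an unlikely outcome drifts toward a coin toss. Hard evidence is needed to pull the estimate back to reality.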

The GDPR requires the introduction of business processes that proactively demonstrate compliance; periodic audits or spot checks are no longer sufficient. Personnel face an IT environment that is constantly changing, as are the methods for acquiring, protecting and processing personal data.

The fines imposed by the ICO (Information Commissioner’s Office) will likely vary, depending on how well a company can demonstrate how it respects personal data and the efforts that have been made to protect it.

Thinking that your programs ensure compliance, and that the chance of an error is less than 50:50, is not the same as actually quantifying with hard evidence what the probability really is. At the same time, since the GDPR continues to evolve and Regulators refine their approach to the requirements for compliance, you will need to account for this moving target.
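One way to move from impression to evidence is to estimate the error rate from sampled compliance checks, with a confidence interval around it. This assumes that you log the outcome of each check. The Wilson score interval used here is our choice of a standard statistical method, not something the GDPR or the ICO prescribes. The sample figures are hypothetical.

```python
import math


def wilson_interval(failures: int, checks: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a failure rate; z = 1.96 gives roughly 95% confidence."""
    if checks <= 0 or not 0 <= failures <= checks:
        raise ValueError("require checks > 0 and 0 <= failures <= checks")
    p_hat = failures / checks
    denom = 1 + z**2 / checks
    centre = (p_hat + z**2 / (2 * checks)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / checks + z**2 / (4 * checks**2))
    # Clamp to [0, 1] to absorb floating-point error at the extremes.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical spot-check log: 3 of 120 sampled records failed a consent or retention check.
failures, checks = 3, 120
low, high = wilson_interval(failures, checks)
print(f"observed {failures / checks:.1%}; plausible error rate {low:.1%} to {high:.1%}")
```

The width of the interval is the point. With 120 checks, an observed 2.5% failure rate is still consistent with a true rate of around 7%, so an impression of "well under 50:50" is not the same as a measured figure.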

So how does a company ensure that it complies on an ongoing basis, and are there any tips to assist in this?

1. Check your relevant Regulator’s website in a scheduled and documented manner, since each regulator’s website grows month on month with supporting documentation and information on what changes are, or will be, implemented.

2. Every company has to demonstrate that it understands its responsibilities and has accountability, processes and governance in place, but STEWARDSHIP is so often omitted entirely from the data and regulatory compliance process. Make sure that you understand data stewardship and have a stewardship program that is coupled to, and provides input into, your GDPR program.

3. Create documented records of how your company develops and uses intelligence and sensing for data risks (proactive), and of how it responds when led by events, relying on institutional agility and flexibility (reactive). Demonstrating simple awareness is not the same as demonstrating your understanding of actual or potential risk and how you have eliminated it.

4. Certification programs such as ISO/IEC 27001 (information security) and ISO 22301 (Security and resilience – Business continuity management systems) require a company to demonstrate to an auditor that its systems and processes actually work. In the same way, your company should undertake actual breach response practice. Every person in the organization should know what to do in case of a breach, and a simulated breach is the only way to gain sufficient insight into your actual readiness and preparedness.

5. The GDPR focuses on record-keeping around consent and the audit trail you need to have. Consent must be easy to withdraw. Keep clear records of all consent taken, establish straightforward withdrawal mechanisms and regularly review procedures to keep up with any changes to processing activities. Then, as with a simulated data breach exercise, undertake a planned, detailed and documented series of consent objections, withdrawals and changes of use. This creates a gap analysis between your present status and where your senior management and DPO require it to be. It will also form part of your audit trail for any Regulatory audit of how you manage your personal data.

6. Do not simply appoint a DPO. Whilst you will have a DPO working hand-in-hand with a Chief Data Officer (CDO), Chief Information Officer (CIO), Chief Information Security Officer (CISO) and other senior leadership, you may also look to appoint a Chief Privacy Officer. This role supports the others in being a champion of privacy within your organization. They will be the individual/s who interact on a daily basis with personnel in operations, ensuring that data privacy is at the forefront of their actions.

7. There is no guarantee that your data practices are in order, and no single tool can provide such an assurance. However, a number of tools can assist with compliance, covering data discovery, consent management systems, self-assessment toolkits and comprehensive data management platforms. Some of these may already be in use for other purposes, without the realization that they can also provide auditable proof of compliance. With your business process managers and business analysts, undertake a detailed investigation into how individual outputs, or a combination of them, could be used to create compliance support data.

8. The GDPR restricts the transfer of Personal Data to recipients located outside the European Economic Area (EEA). Understanding the appropriate use of the available lawful Personal Data transfer mechanisms is essential for all organisations that wish to transfer Personal Data to Third Countries. These mechanisms can prove tricky for front-line operational staff to navigate, particularly for ad-hoc data transfers. To facilitate lawful transfers, create decision trees so that staff fully understand when, and under what conditions, such transfers are permitted. Simple, inexpensive tools such as decision trees also allow swift changes to be made where required, without the need for additional training.

9. Develop and determine performance metrics for your GDPR program. They will demonstrate the continued improvement of the company’s Personal Data related operational practices. Examples include the rate of satisfactory resolution of Data Protection complaints, response times for Subject Access Requests, and the audit trail showing that a data breach was managed according to the company’s policies and procedures. These metrics inform senior management and the DPO, as well as a Regulator.

10. Evaluate using a multi-layer approach. When evaluating Personal Data related operational practices, use layers to cross-reference the data, such as: Business process owner self-assessment; Internal audit review of business unit compliance; External party audit of organisation compliance. This method enables benchmarking and direct comparison against previous assessments and audits, covering both operational compliance across business units and operational performance against peer organisations.
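Item 8 above recommends decision trees for Third Country transfers. Below is a minimal sketch of one, written as a single function so that a change in the rules means editing one place. The structure is a greatly simplified reading of GDPR Chapter V: adequacy decisions (Art. 45), appropriate safeguards such as standard contractual clauses or binding corporate rules (Art. 46), and derogations (Art. 49). It is not legal advice. The `ProposedTransfer` fields and the PERMIT/ESCALATE/BLOCK outcomes are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record of a proposed transfer; a real tree would be drafted with your DPO and legal team.
@dataclass
class ProposedTransfer:
    destination: str
    destination_in_eea: bool
    adequacy_decision: bool = False      # Art. 45: destination covered by an adequacy decision
    safeguard: Optional[str] = None      # Art. 46: e.g. "standard contractual clauses", "BCRs"
    derogation: Optional[str] = None     # Art. 49: e.g. "explicit consent"


def assess_transfer(t: ProposedTransfer) -> tuple[str, str]:
    """Walk a simplified decision tree; returns (outcome, reason).

    outcome is "PERMIT", "ESCALATE" or "BLOCK" (illustrative labels).
    """
    # Node 1: destinations inside the EEA are not Third Country transfers.
    if t.destination_in_eea:
        return "PERMIT", f"{t.destination} is within the EEA"
    # Node 2: an adequacy decision covers the destination.
    if t.adequacy_decision:
        return "PERMIT", f"adequacy decision covers {t.destination} (Art. 45)"
    # Node 3: an appropriate safeguard is in place for this recipient.
    if t.safeguard:
        return "PERMIT", f"safeguard in place: {t.safeguard} (Art. 46)"
    # Node 4: derogations are narrow, so front-line staff escalate rather than decide.
    if t.derogation:
        return "ESCALATE", f"derogation '{t.derogation}' claimed (Art. 49): refer to the DPO"
    # Leaf: no lawful mechanism identified.
    return "BLOCK", "no lawful transfer mechanism identified: do not transfer"


adhoc = ProposedTransfer("supplier outside the EEA", destination_in_eea=False, derogation="explicit consent")
outcome, reason = assess_transfer(adhoc)
print(outcome, "-", reason)
# ESCALATE - derogation 'explicit consent' claimed (Art. 49): refer to the DPO
```

Routing derogations to the DPO instead of permitting them outright is a design choice for this sketch. It keeps the riskiest, most fact-specific judgements away from ad-hoc decisions made under time pressure.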

Companies globally are facing increasing business challenges posed by emerging data protection laws

We live today in a global digital economy that is primarily built upon the collection and exchange of data, including large amounts of personal data – much of it sensitive. With this immense growth comes a requirement for public confidence in the ability of nation state governments to protect this information. Complying with increasing data protection laws requires significant time and effort, but there are positive implications to such regulations.

The Challenge

As more countries implement data protection laws coupled with increasingly onerous limitations on the use, storage and transmission of data, so the risks of regulatory prosecution increase. Managing the shifts in the legal environment is not simply a task for a legal team or the DPO, since processes change and systems are increasingly sophisticated in the way they acquire and process data.

The Solution

Increasing litigation, and a growing number of opportunities to fall foul of a country’s data protection regulations, create the need for a dedicated activity within your GDPR program. A method known as environmental scanning can be used to analyse trends across a large number of data-related laws and to create a common taxonomy of their provisions.

Using this as a baseline within your organization can assist in forecasting what the frameworks focus on; many already draw heavily upon the GDPR in their format, definitions, requirements and penalties. There are clear common emphases across existing and draft regulations. Plotting these against business processes and data flows makes it clearer which areas require new impact assessments and which can be given a lower priority, freeing up resources and budgets for managing the high-risk areas.
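Below is a minimal sketch of plotting scan results against data flows. It assumes that the environmental scan has reduced each regulation to a set of common provision labels. The regulation names (`REG_A` and so on), provision labels, high-risk set and data flows are all hypothetical placeholders, not summaries of any real law.

```python
# Hypothetical output of an environmental scan: each regulation reduced to common provision labels.
PROVISIONS_BY_REGULATION = {
    "REG_A": {"consent", "breach_notification", "transfer_restriction"},
    "REG_B": {"consent", "opt_out"},
    "REG_C": {"consent", "localisation"},
}
# Provisions the business treats as high risk (an assumption for this example).
HIGH_RISK = {"transfer_restriction", "localisation"}

# Hypothetical data flows: applicable regulations, and provisions already covered by an impact assessment.
DATA_FLOWS = {
    "crm_export": {"regulations": ["REG_A", "REG_C"], "assessed": {"consent", "breach_notification"}},
    "web_analytics": {"regulations": ["REG_B"], "assessed": {"consent"}},
    "payroll": {"regulations": ["REG_A"], "assessed": {"consent", "breach_notification", "transfer_restriction"}},
}

PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


def prioritise(flows, provisions, high_risk):
    """Return (flow, priority, unassessed provisions) tuples, highest priority first."""
    results = []
    for name, flow in flows.items():
        # Union of every provision imposed by the regulations that apply to this flow.
        required = set().union(*(provisions[r] for r in flow["regulations"]))
        gaps = required - flow["assessed"]
        if gaps & high_risk:
            priority = "high"      # a high-risk provision still lacks an impact assessment
        elif gaps:
            priority = "medium"    # other provisions still to assess
        else:
            priority = "low"       # already covered; resources can go elsewhere
        results.append((name, priority, sorted(gaps)))
    return sorted(results, key=lambda row: (PRIORITY_ORDER[row[1]], row[0]))


for flow, priority, gaps in prioritise(DATA_FLOWS, PROVISIONS_BY_REGULATION, HIGH_RISK):
    print(f"{priority:<6} {flow:<14} {', '.join(gaps) or '-'}")
```

When the scan adds a draft regulation, only `PROVISIONS_BY_REGULATION` changes, and rerunning the function shows which flows move up the queue for a new impact assessment.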

The Regulations that May Affect Your Business

The summaries of international regulations listed below are intended solely as indicative provisions that may impact your business and in no way represent the full and detailed extent of the regulations per country.

United States of America: On Jan. 1, 2020, the new California Consumer Privacy Act (CCPA) went into effect. It takes a broader view than the GDPR of what constitutes private data, and also of what constitutes sensitive data. For example, olfactory information is covered, as well as browsing history and records of a visitor’s interactions with a website or application.

China: A detailed national standard known as the Personal Information Security Specification (the PI Security Specification) entered into effect on 1 May 2018. This non-binding guideline contains detailed requirements on data handling and data protection. It imposes data privacy obligations on network operators and applies to all organisations in China that provide services over the internet or another information network. Additionally, under the Administrative Provisions on Information Services of Mobile Internet Application Programs (effective 28 June 2016), app providers must clearly indicate to customers if they are collecting geolocation data, accessing address books on their smartphones, using cameras, activating audio recording or other functions, and must obtain the user’s unforced consent. The Provisions also prohibit the activation of functions unrelated to the service.

Singapore: The Personal Data Protection Act 2012 (No. 26 of 2012) (“PDPA”) establishes a general data protection law which applies to all private sector organisations. It sets out obligations of organisations in respect of the collection, use, disclosure, access, correction, care, protection, retention, and transfer of personal data (including transfers of personal data out of Singapore). The PDPA applies to all organisations that are not a public agency, or acting on behalf of a public agency, whether or not they are formed or recognised under the laws of Singapore, or resident or having an office or a place of business in Singapore. Additionally, the Spam Control Act (Cap. 311A) (“SCA”) regulates the bulk sending of unsolicited commercial electronic messages to email addresses or mobile telephone numbers.

India: The Indian government finally introduced its Personal Data Protection Bill in Parliament on Dec. 11, 2019, after more than two years of fierce debate on the bill’s provisions. The country is seeking to develop a comprehensive data governance framework that would affect virtually any company attempting to do business in India. Many of the consent-related provisions in India’s data protection bill sound quite similar to those enshrined in the European Union’s General Data Protection Regulation (GDPR). A major difference, though, is the bid to regulate social media corporations: the bill proposes the creation of a special class of “significant data fiduciaries” known as “social media intermediaries”. These are defined as entities whose primary purpose is enabling online interaction among users. Further, the legislation requires that certain types of data be stored in India. “Critical personal data” must be stored and processed only in India. “Sensitive personal information” must be stored within India, but can be copied elsewhere provided certain conditions are met.

Thailand: The Personal Data Protection Act, or the PDPA, is a prescriptive and detailed data security regime that sets high standards for protecting personal information. It grants individuals greater rights over how their data is collected and used, and equips the regulators with the power to impose heavy fines on companies for non-compliance. The PDPA is modeled after the General Data Protection Regulation (Regulation (EU) 2016/679).

Potentially catastrophic financial penalties for regulatory breach? I’m insured, right??

In a previous post, we mentioned that certain regulations, including the GDPR, assume guilt on the part of a commercial entity, that then has the onus placed upon it to prove innocence in order to avoid severe financial penalties.

Companies may seek to mitigate the risk of punitive fines by insuring against such negative impacts upon the business. However, it is not clear cut whether such insurance will actually achieve the desired management of these risks.

The Challenge

To what extent then, if any, can you insure against the effects of a data breach with the consequent impact of regulatory financial penalties?

In theory, there is value to be found in cyber policies when it comes to covering the costs of responding to a data breach or cyber-attack, dealing with related third-party claims and complaints and, for example, repairing damaged software.

However, the vast majority of cyber policies will provide cover for fines and penalties “to the extent insurable by law”. This leaves the question of whether fines imposed by a data Regulator are insurable under the laws of the country concerned and this is by no means clear.

A regulator may impose a fine as a result of criminal conduct on the part of a company, and such a fine will not be insurable. It follows that where a company is found to have intentionally, recklessly and/or negligently breached the terms of the data protection legislation, any subsequent fine is very likely to be uninsurable.

By contrast, in the case of a highly sophisticated, novel cyber-attack (previously unseen by the security community), it could be argued that the company’s conduct cannot be open to criticism. Even so, it is not yet clear whether a resulting fine is recoverable, despite there having been no criminally negligent action on the part of the insured.

Loss adjusters may be used, in conjunction with I.T. security specialists, to check that the insured’s systems have been maintained, secured and had the appropriate security patches and updates applied in a timely manner. A failure at this point may cause the settlement to fail entirely or in part.

In the two major UK cases of British Airways and the Marriott hotel group, the Regulator (ICO) imposed financial penalties. In the British Airways case, customer data had been “compromised by poor security arrangements at the company”. By contrast, in the Marriott case, the fine was caused not by a failure of existing operations, but by the company having “failed to undertake sufficient due diligence” when it purchased a company which had suffered the cyber-attack (Starwood Hotel Group). In both instances, the size of the fines was such that the ICO clearly believed the failures were criminally negligent, and as such these fines are unlikely to be insurable under the applicable law in the UK.

If this is the case, then it follows that ICO fines for breach of GDPR are probably uninsurable under English law. Whether this holds for all jurisdictions is still unclear, given the lack of cases and judicial precedent to provide guidance. In practice, then, there are significant obstacles to recovery, since the legal and insurance position varies so greatly between jurisdictions.

The Global Federation of Insurance Associations (GFIA) has asked the OECD for clarity, since “there is international confusion as to the insurability of fines and penalties. OECD work to clarify this issue would benefit consumer and insurer contract certainty”.

The Solution

It is essential that companies fully understand where their exposures lie, work closely with their risk carrier to ensure there is an appropriate risk transfer solution, and have a tested incident response plan in place.

While the insurability of GDPR fines may be limited, or even excluded following further clarity on the issue, insurance should form part of an organization’s risk management strategy to mitigate the costs associated with GDPR non-compliance and resulting business disruption losses. Costs may include legal fees and litigation, regulatory investigation, remediation, compensation and notification of impacted data subjects, and the PR and increased advertising costs of repairing reputational damage.

In principle, there may be several routes to recovering costs, such as direct indemnity claims under E&O policies, or an indirect claim against professional advisers, directors and/or employees under the respective D&O or E&O policies.

Given the current uncertainty and the ongoing changes to policy limits and exclusions, what is clear is that all companies need a comprehensive ability to provide auditable proof of compliance.

Data Risk Foresight can assist through the implementation of our CyCalc software solution. It uses client-specific data, external data and actual threat data to extrapolate and quantify business process financial exposures. It also provides a “what-if” capability to model scenarios and changes made to systems and processes.

Using CyCalc demonstrates a clear intent to comply, by identifying those risks and their values. Its historic data plots your security maturity over time, further strengthening arguments against negligence.

Knowing risks and their financial values facilitates risk management, risk transfer and mitigation. In this increasingly regulated environment, such data has become of even greater importance.

Business continuity management has become more critical as insurers fail to pay out to policyholders.

Pre-Covid-19, many businesses believed that their commercial policies covered them for business interruptions caused by a multitude of factors, including cyber attacks and the exfiltration and/or destruction of mission-critical data. Risk carriers were willing to accept premiums to cover losses arising from a business’s inability to function. However, with 370,000 firms losing earnings as a result of the Covid-19 lockdown, insurers such as Hiscox, QBE and RSA all failed to pay out to policyholders, arguing that the policy wordings made it clear that the impact of an epidemic upon earnings was not covered.

As a result of their actions, the UK Financial Conduct Authority (FCA) brought a test case before the High Court, based on a representative sample of 17 policy wordings used by 16 insurers. After an eight-day hearing, the Court ruled that policyholders were covered.

Similarly, in the U.S., House members asked insurers to retroactively recognize financial losses relating to COVID-19 under commercial business interruption coverage for policyholders. However, like the UK risk carriers, the U.S. insurers stated that “Standard commercial insurance policies offer coverage and protection against a wide range of risks and threats and are vetted and approved by state regulators. Business interruption policies do not, and were not designed to, provide coverage against communicable diseases such as COVID-19”.

So why is Covid-19 affecting the cyber insurance market? Successful cases have forced risk carriers to pay out substantial funds, and in some cases they have had to recapitalise their businesses to meet the minimum capital adequacy ratios for regulatory compliance. Hiscox in London, for example, paid out $475m for cancelled events and business interruption policies, to 33% of the total number of UK business interruption policies it had underwritten.

On 3 March 2021, the CEO of Hiscox, Bronek Masojada, admitted that the brand had suffered reputational damage from the episode and apologised: “We clearly regret the uncertainty and anguish that the dispute has caused to our customers, so it is important that we learn from this experience. The most important lesson is the need for clarity in wordings, to ensure intent is properly reflected in the policy detail. In addition, the customisation of policies has to be restricted to ensure that there is not a long tail of wordings serving very small numbers of customers.”

The net result of this entire scenario has been tighter limits on business interruption coverage, whether the disruption is caused by a pandemic or is man-made, as in the case of cyber attacks. It is worth noting that in the case of cyber insurance, the vast majority of cover is provided in the U.S., and the bulk of this coverage is underwritten at Lloyd's of London.

With silent cyber (cyber exposure that is neither explicitly included nor explicitly excluded in traditional policies) still a fundamental block to mass coverage of sufficient volume and level of cover, there is little incentive for a risk carrier (primary insurer, reinsurer or other) to provide such protection when core P&C (property and casualty) insurance products generate over 90% of the industry's profits. The court-enforced Covid-19 payouts have given insurers further impetus to restrict cyber coverage through policy wordings, or to eliminate it for the sector altogether.

With the above in mind, it becomes far more important that businesses have sound business continuity plans in place. Using Quantar’s CyCalc® products, businesses can map between their proprietary business processes, systems and various categories. This provides the means to prioritise security allocations and capital budgeting, allowing you to focus on the processes with the highest need.

With the financial risks quantified, CyCalc® provides “what-if” functions, enabling you to model various scenarios to understand their impact upon financial risk exposures and to undertake cost-benefit analysis of various mitigation options.
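The kind of cost-benefit comparison described above can be illustrated with the classic annualised loss expectancy (ALE) model, in which ALE is the single loss expectancy (SLE) multiplied by the annualised rate of occurrence (ARO). The Python sketch below is a generic textbook calculation, not CyCalc®'s method. The `Mitigation` class, its fields, the option names and all figures are hypothetical, and it assumes each control simply cuts expected annual loss by a fixed fraction.

```python
from dataclasses import dataclass


@dataclass
class Mitigation:
    """A hypothetical mitigation option (illustrative fields only)."""
    name: str
    annual_cost: float     # yearly cost of running the control
    loss_reduction: float  # assumed fraction (0..1) by which expected annual loss falls


def annual_loss_expectancy(single_loss: float, annual_rate: float) -> float:
    """ALE = single loss expectancy (SLE) x annualised rate of occurrence (ARO)."""
    return single_loss * annual_rate


def net_benefit(single_loss: float, annual_rate: float, option: Mitigation) -> float:
    """Reduction in ALE delivered by the option, minus what the option costs."""
    baseline = annual_loss_expectancy(single_loss, annual_rate)
    residual = baseline * (1 - option.loss_reduction)
    return (baseline - residual) - option.annual_cost


def rank_options(single_loss: float, annual_rate: float,
                 options: list[Mitigation]) -> list[tuple[str, float]]:
    """Sort options by net benefit, best first."""
    scored = [(o.name, net_benefit(single_loss, annual_rate, o)) for o in options]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    options = [
        Mitigation("option A", annual_cost=100_000, loss_reduction=0.5),
        Mitigation("option B", annual_cost=250_000, loss_reduction=0.6),
    ]
    # "What-if": re-run the ranking under different assumed incident rates.
    for rate in (0.1, 0.3, 1.0):
        print(rate, rank_options(2_000_000, rate, options))
```

Because the options are ranked against each other rather than judged in isolation, a higher assumed incident rate can change which control comes out ahead. Exposing that sensitivity is the point of a what-if run.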

Quantar has been at the forefront of cyber risk management, including business continuity planning, for over 20 years.

Ever Given Vessel Grounding in Suez Canal – A lesson in potential future cyber marine impacts

The grounding of the giant container vessel Ever Given in the Suez Canal had an immediate supply chain impact, along with the potential for substantial claims. While specialist marine brokers such as McGill and Partners estimated losses in excess of $100 million, analysts at Morgan Stanley claim insured losses will be minimal, since neither the ship’s hull nor its cargo was damaged and the refloating costs are relatively small.

Although direct insurance losses from loss of, or damage to, the vessel and/or its cargo appear to have been extremely small, the overall claims could still be significant. With the ship grounded, blocking the transit of 422 vessels, the supply chain losses have been estimated at between $6-10 billion per week (Allianz estimate), with the Suez Canal Authority losing around $16 million per day in revenue (Refinitiv estimate).

The cause of the grounding is still under investigation; however, the Egyptian authorities have so far blamed it on high winds and a sandstorm. Whatever the cause of this accident, the result was the disruption of more than 10% of global trade. According to marine analysts, such an event has been in the making for many years.

Financial implications arise from potential claims against the vessel owner and/or operator for consequential losses caused by delays in accessing the Suez Canal, which may take years of litigation to resolve. The party most likely to cover such losses is the protection and indemnity (P&I) club that provides insurance through the pooled scheme covering the majority of the global fleet. These losses will feed into the global reinsurance market, and because this is structured via a panel of providers, the impact upon any single reinsurer should be manageable.

The Lesson for Cyber Impacts

Whilst the present incident appears to have been caused by naturally occurring events (high winds, a sandstorm and low visibility), the lesson should be that similar incidents may equally be caused by hostile action such as a cyber attack.

Certain geographical locations offer opportunities to threat actors because a vessel's route through them is effectively predetermined (the alternative to the Suez Canal is rounding the Cape of Good Hope, which adds 10 days' transit time and vastly higher fuel and operating costs).

With vessel tracking freely accessible online and combined with ship-specific data, there are ever-increasing windows of attack targeting specific IT/OT vulnerabilities. The global regulator, the International Maritime Organization (IMO), has long recognised this and moved to introduce cyber risk management requirements for all vessels from January 2021.

However, the marine sector operates on threadbare margins and continually seeks to reduce operating costs through increased automation and the outsourcing of non-core activities. This combination means that the likelihood of a cyber-induced major incident involving vessels of the Ever Given's class increases over time. Quantar’s CyCalc® predictive cyber threat analytics can provide deep insight into a vessel's vulnerabilities and its IT/OT interdependencies, as well as providing data support to fleet owners and their P&I Clubs.

What the Darkside Hacks Tell Us About Future Attack Trends

May 2021 brought a major cyber attack on a national infrastructure entity upon which a large percentage of a country’s population depends. Prior to this, a global technology corporation suffered a similar fate, and the Irish healthcare system was targeted shortly after the first two events.

In the case of the Colonial Pipeline cyber attack, the hacking group Darkside stated that it had never intended to cause the negative social impact that resulted from fuel shortages in the U.S. The group then claimed that its sole objective was financial, that it was agnostic with regard to politics, and that it would now change its organization to ensure all proposed attacks were signed off within the group prior to execution.

Can a hacker group credibly claim to have a single motivation, and to both represent and control all the members within that organization? That is something of a rhetorical question, of course, but can this series of incidents, occurring within a short period of each other, tell us anything about future attack trends?

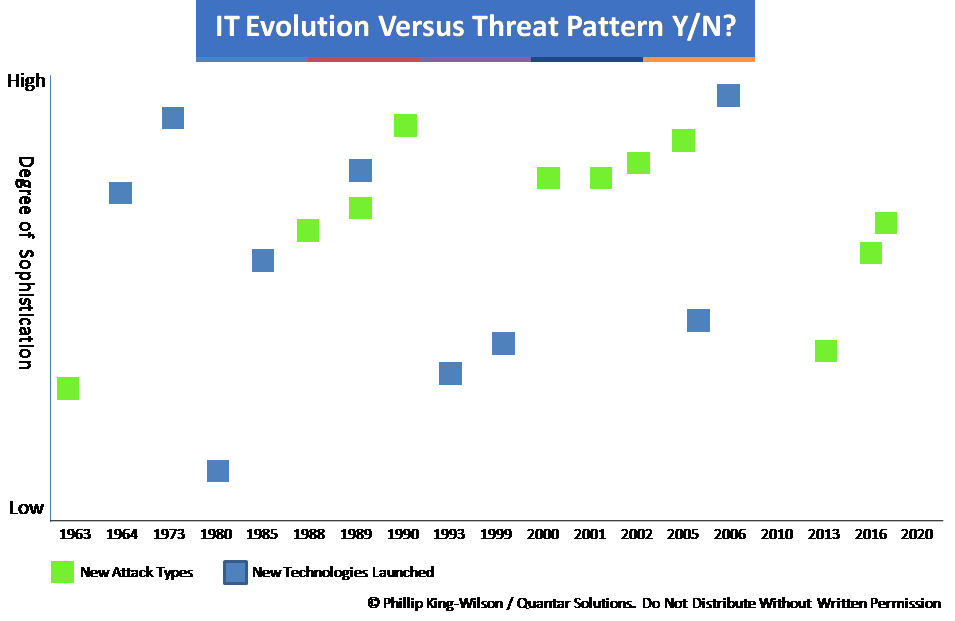

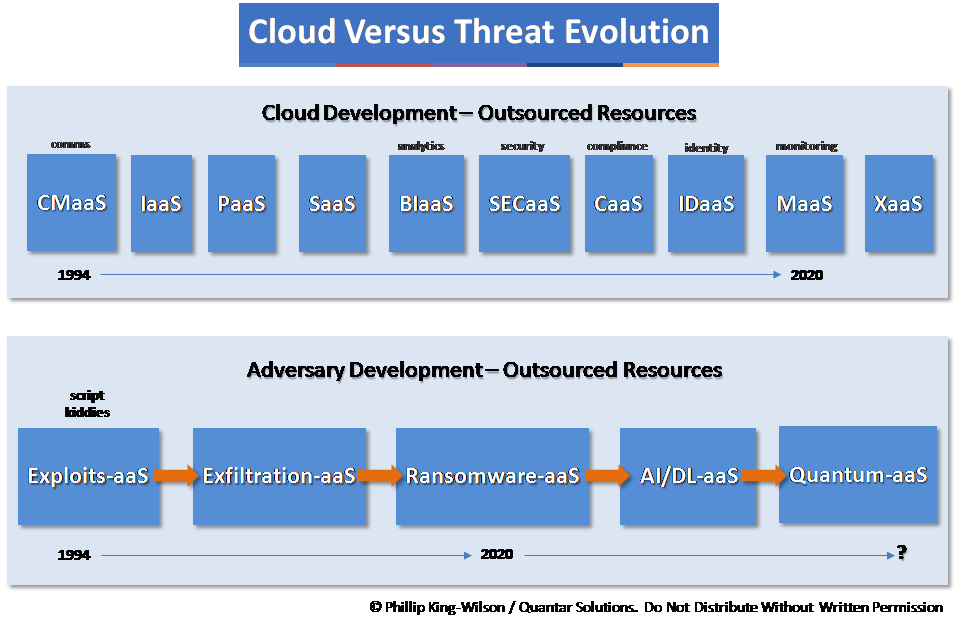

The first hack occurred in 1963, with the objective of gaining free use of telephone systems, and thus for financial advantage. The first network penetration attack took place in 1988 and, shortly after, the first ransomware attack in 1989; again for financial advantage.

It would thus appear, from the above and from the recent attacks, that types of cyber attack evolve in lockstep with technology. However, when technology evolution is matched against attack evolution, the picture is less clear.

The ability to automate attacks and reduce the level of skill an attacker needs to execute an attack successfully is a more recent development. At the outset of modern computing, developers worked at machine code level, in ones and zeros. As computing grew, so did the number of tools, such as compilers, that allowed developers to work faster and more efficiently. Using a high-level programming language in conjunction with a compiler eventually enabled code to be written once and run in multiple environments.

As software has evolved, so have developer tools, and over successive generations the ability to work at base code level has been supplanted by the ability to write either client or server software in order to meet continually growing demand. As technologies increase in complexity, the proportion of full-stack developers falls. The same tooling that spares developers from understanding the full stack also enables hackers with low to moderate skills to execute attacks successfully.

With financial gain remaining the main motivation for cyber attacks, attackers and would-be hackers have used the same software developer tools to create highly effective hacker toolkits that require little skill to use. Organized hacking groups such as Darkside run what is effectively a franchise model (ransomware-as-a-service), in which instructions are provided alongside attack tools in exchange for a percentage of the ransoms paid.

We know that Colonial Pipeline paid $5 million, while Toshiba and the Irish Government have not stated whether they paid (the Irish representative stated that no ransom had been paid “as yet”). For individuals seeking financial gain, hacking offers a path to potential wealth, with few international cases brought against hackers.

Covid-19 has created an additional step-change: remote working and the forced rapid digitisation of entire sectors have left security practitioners behind the curve in terms of personnel, in a market with a dearth of skilled professionals.

So, returning to the original question of whether the recent major attacks tell us anything about future trends: the pursuit of personal financial gain will always be a human trait. Given the current balance between the perceived likelihood of success and the risk of being caught and imprisoned, the probability of attackers exploiting the available technologies is very high. As we move into the realm of 5G (6G has been in development since 2019), AI, increased holographic use and VR, the threat vectors multiply and offer greater attack opportunities.

As we continue on our path of ever-increasing dependence on computing and outsourcing to third parties, we also continue to build upon unsound foundations for cyber attack resilience. The outlook is clear: cyber attacks will proliferate across methods, targets, skill levels and ransom payouts for as long as the regulatory, enforcement and security capabilities of all countries lag behind highly motivated individuals and organized groups.

Operational Resilience Versus Business Continuity

What Is Operational Resilience?

Commentators and software vendors argue that Covid-19 has increased the need for organizational resilience to cater for changes in working practices, cloud ramp-up and increasing ransomware attacks. However, there is often confusion between the terms organizational resilience and operational resilience, and also between operational resilience and business continuity. So how can we clarify the differences, and what role will each increasingly play?

ISO 22316 defines organizational resilience as: “the ability of an organization to absorb and adapt in a changing environment to enable it to deliver its objectives and to survive and prosper”.

By contrast, operational resilience has been defined as: “initiatives that expand business continuity management programs to focus on the impacts, connected risk appetite and tolerance levels for disruption of product or service delivery to internal and external stakeholders”.

Operational resilience and business continuity fit together in that both, along with risk management, form the foundations of organizational resilience. In the case of business continuity, the focus is upon the systems and processes that determine the ability to deliver products and/or services to clients. This entails a bottom-up approach to mapping and the creation of response plans covering a defined number of areas. With increasing technology integration, this frequently prioritises system and IT downtime impacts, with contingency plans and third-party dependency assessments.

By contrast, operational resilience has a far wider envelope than business continuity management (BCM) and starts from a top-down approach, with client acceptance as a key driver: how much disruption will a client accept before the organization suffers lost custom, reputational damage and customer churn? Additionally, operational resilience encompasses the overall operating environment, including regulations, a large number of stakeholders and new or emerging technologies and their potential impact. As such, operational resilience may be better regarded as a management framework within which an organization is required to conduct its business.

As Covid-19 has demonstrated, many firms and governments globally clearly lacked business continuity plans, let alone operational resilience programmes. The ISO 22316 organizational resilience standard was published in 2017 and provides guidance that can serve as a baseline for resilience plans, and yet it seems to have been ignored by the majority.

Covid-19 has sped up changes previously anticipated and exposed frailties ranging from the global supply chain to security compromises arising from changing working practices. Incoming laws such as the E.U.’s Digital Operational Resilience Act (DORA) were formulated well before the impact of Covid-19, and DORA applies only to the financial services sector. However, growing recognition of endemic weaknesses across sectors will surely lead to further laws requiring organizations to take action to build and maintain organizational resilience, so that governments do not have to financially support entire sectors, and operational resilience, to reduce exposure to possible but improbable risks.

Top 10 Benefits of Building Operational Resilience

- Capital allocation efficiency: addressing risk proactively rather than through high-cost, remedial "autopsy" risk management;

- Stakeholder assurance: corporate value effects e.g. governance, environment, equality;

- Higher resilience results in a more agile organization, better able to compete in dynamic operating environments;

- Creates greater organizational resilience and a closer fit between operations and corporate strategy;

- Greater accountability for new and emerging technologies in an era of accelerated innovation and digital attacks;

- Preparedness for increasing regulatory scrutiny and a growing volume of laws across sectors.